Web crawling and web scraping are two essential techniques for extracting data from the internet, but they serve different purposes and operate in distinct ways. Understanding the differences between these methods is crucial for choosing the right approach for your data needs. Below, we’ll break down the key distinctions between web crawling and web scraping.

1. Definition and Purpose

- Web Crawling:

Web crawling involves automatically browsing and indexing web pages across a website or the entire internet. A web crawler (or spider) follows links to discover and collect URLs, often for purposes like search engine indexing or site mapping.

- Web Scraping:

Web scraping focuses on extracting specific data from a known webpage or set of webpages. It involves parsing the HTML structure of a page to retrieve targeted information, such as product details, prices, or contact information.

Key Difference:

Crawling is about discovery and indexing, while scraping is about data extraction.
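To make the distinction concrete, here is a minimal sketch that runs both steps against the same page using only Python's standard library. The sample HTML, the class names `product` and `price`, and the helper names `discover_links` and `extract_fields` are assumptions made up for illustration; a real project would more likely rely on a library such as BeautifulSoup or Scrapy.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical page content, used only for illustration.
SAMPLE_HTML = """
<html><body>
  <h1 class="product">Blue Kettle</h1>
  <span class="price">$24.99</span>
  <a href="/kettles/red">Red Kettle</a>
  <a href="https://example.com/about">About</a>
</body></html>
"""


class LinkCollector(HTMLParser):
    """Crawling step: discover URLs by collecting every href on the page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))


class FieldExtractor(HTMLParser):
    """Scraping step: pull the text of elements with chosen class names."""

    def __init__(self, wanted_classes):
        super().__init__()
        self.wanted = set(wanted_classes)
        self.current = None  # class name whose text we are waiting for
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls in self.wanted:
            self.current = cls

    def handle_data(self, data):
        if self.current and data.strip():
            self.fields[self.current] = data.strip()
            self.current = None


def discover_links(html, base_url):
    parser = LinkCollector(base_url)
    parser.feed(html)
    return parser.links


def extract_fields(html, wanted_classes):
    parser = FieldExtractor(wanted_classes)
    parser.feed(html)
    return parser.fields


links = discover_links(SAMPLE_HTML, "https://example.com/kettles/blue")
record = extract_fields(SAMPLE_HTML, ["product", "price"])
```

The crawler-style function only cares where the page points next, while the scraper-style function ignores the links and returns a small structured record.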

2. Scope and Process

- Web Crawling:

Crawlers traverse websites systematically, often starting from a seed URL and following links to explore new pages. The process is broad and aims to cover as much of the web as possible.

- Web Scraping:

Scraping is more focused and typically targets specific pages or datasets. It involves analyzing the page structure to extract the desired information, often using tools like BeautifulSoup or Scrapy.

Key Difference:

Crawling is wide-ranging, while scraping is targeted.
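The seed-and-follow process a crawler uses can be sketched as a breadth-first traversal. The example below is a simplified illustration, not how any particular crawler is implemented: `FAKE_SITE` is a made-up in-memory link graph standing in for real HTTP requests, and `crawl` and `get_links` are hypothetical names.

```python
from collections import deque

# Hypothetical in-memory "site": each URL maps to the links found on that page.
FAKE_SITE = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}


def crawl(seed_url, get_links, max_pages=100):
    """Breadth-first traversal from seed_url; returns URLs in visit order."""
    seen = {seed_url}          # URLs already queued, to avoid revisiting
    queue = deque([seed_url])  # frontier of pages still to visit
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


order = crawl("https://example.com/", lambda url: FAKE_SITE.get(url, []))
```

In a real crawler, `get_links` would fetch the page and parse its links, and `max_pages` acts as a simple budget so the traversal stops on large sites. A scraper, by contrast, usually starts from a known list of URLs and skips the discovery loop entirely.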

3. Tools and Techniques

- Web Crawling:

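Popular tools for crawling include Scrapy and Apache Nutch, which are designed to handle large-scale data collection and indexing. Googlebot, the crawler Google runs to build its search index, is the best-known example of a crawler in production, although it is not a tool you can deploy yourself.

- Web Scraping: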

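Scraping tools like BeautifulSoup and Selenium are used to extract data from specific webpages, with Selenium driving a real browser for pages that render content with JavaScript. Pandas is often used alongside them to clean and process the extracted data. These tools are often customized for particular data extraction tasks.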

Key Difference:

Crawling tools focus on discovery and indexing, while scraping tools focus on data extraction and parsing.

4. Use Cases

- Web Crawling:

Crawling is commonly used by search engines like Google to index web pages, by businesses to monitor website changes, or by researchers to collect large datasets for analysis.

- Web Scraping:

Scraping is used for tasks like price comparison, sentiment analysis, lead generation, and extracting structured data from websites for business intelligence.

Key Difference:

Crawling is ideal for broad data collection, while scraping is suited for specific data extraction.

5. Legal and Ethical Considerations

Both crawling and scraping must adhere to legal and ethical guidelines. For example:

- Crawling: Ensure compliance with a website’s `robots.txt` file to avoid unauthorized access.

- Scraping: Respect copyright laws and avoid overloading servers with excessive requests.

Key Difference:

Crawling often involves indexing publicly available data, while scraping may require permission for extracting specific content.
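As a rough illustration of the two guidelines above, the sketch below checks URLs against a `robots.txt` policy and picks a delay to use between requests, using Python's standard `urllib.robotparser` module. The `robots.txt` content, the `example-bot` user agent, and the helper names `load_policy` and `plan_requests` are assumptions for the example; a real crawler would download the file from the target site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would fetch it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-bot"  # hypothetical user-agent name


def load_policy(robots_txt):
    """Parse robots.txt text into a policy object without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def plan_requests(policy, urls, default_delay=1.0):
    """Return the URLs the policy allows and the delay (seconds) between requests."""
    # Use the site's Crawl-delay if it declares one, otherwise a fallback.
    delay = policy.crawl_delay(USER_AGENT) or default_delay
    allowed = [url for url in urls if policy.can_fetch(USER_AGENT, url)]
    return allowed, delay


policy = load_policy(ROBOTS_TXT)
allowed_urls, delay = plan_requests(
    policy,
    ["https://example.com/products/1", "https://example.com/private/data"],
)
```

A fetch loop would then call something like `time.sleep(delay)` between requests to the allowed URLs. Note that `robots.txt` expresses the site owner's wishes rather than granting legal permission, so terms of service and copyright still need to be checked separately.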

Key Differences

| Aspect | Web Crawling | Web Scraping |
|---|---|---|
| Purpose | Indexing and discovery | Data extraction |
| Scope | Broad | Narrow |
| Output | Sitemaps, indexes | Structured data (CSV, JSON) |
| Tools | Search engine bots (e.g., Googlebot) | Scraping tools (e.g., BeautifulSoup, Scrapy) |

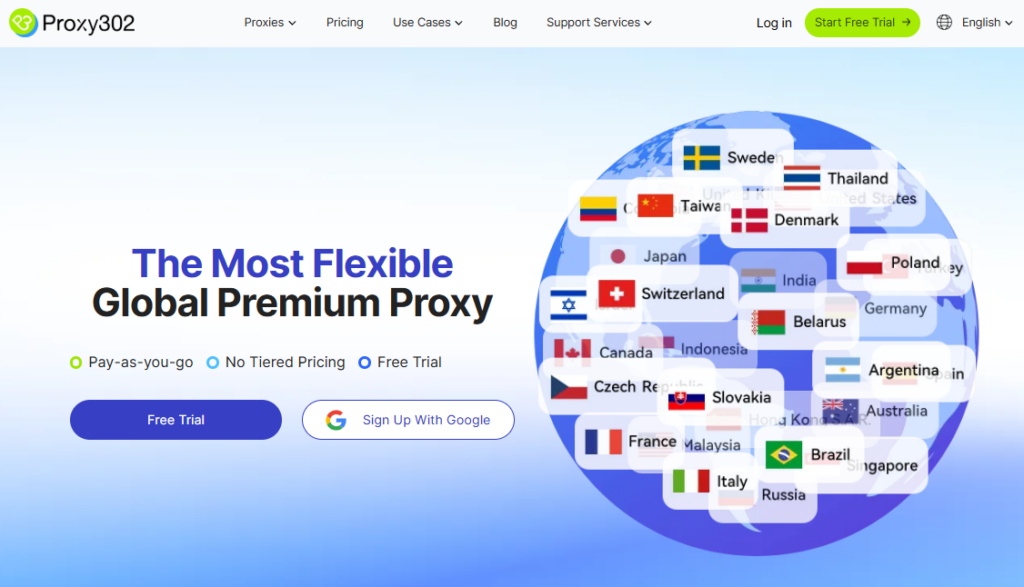

How Proxy302 Enhances Web Crawling and Scraping

Proxy302 is a powerful tool for both web crawling and scraping, offering features that improve efficiency, security, and reliability. Here’s how it helps:

1. Access to Global IPs for Geo-Specific Data

Proxy302 provides access to 65+ million IPs across 195+ countries, enabling crawlers and scrapers to access geo-restricted content. This is particularly useful for tasks requiring data from specific regions, such as local news or regional pricing.

2. Avoiding IP Bans and Rate Limits

By rotating IPs, Proxy302 helps avoid IP bans and rate limits imposed by websites. This ensures uninterrupted crawling and scraping operations, even on heavily protected sites.
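The general idea of IP rotation can be sketched as cycling through a pool of proxy endpoints so that consecutive requests leave from different addresses. This is a generic round-robin illustration, not Proxy302's actual API: the proxy URLs and credentials are placeholders, and `ProxyRotator` is a hypothetical helper built on the standard library's `urllib.request.ProxyHandler`.

```python
from itertools import cycle
import urllib.request

# Placeholder proxy endpoints; these are NOT real Proxy302 addresses.
PROXY_POOL = [
    "http://user:pass@proxy-1.example.com:8000",
    "http://user:pass@proxy-2.example.com:8000",
    "http://user:pass@proxy-3.example.com:8000",
]


class ProxyRotator:
    """Hands out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._cycle = cycle(proxies)

    def next_proxy(self):
        return next(self._cycle)

    def opener(self):
        """Build a urllib opener that routes traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXY_POOL)
picks = [rotator.next_proxy() for _ in range(4)]  # wraps around after 3
```

Each request would then be sent with `rotator.opener().open(url)`. Many proxy services handle rotation on their side behind a single gateway address, in which case a client-side pool like this may not be needed.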

3. Enhancing Anonymity and Security

Proxy302 masks your real IP address, so target websites see the proxy’s IP rather than yours during data extraction. This helps protect your identity and makes it harder for websites to block your activities by IP.

4. Supporting High-Volume Operations

Proxy302’s robust infrastructure supports high-volume crawling and scraping, making it ideal for large-scale data collection projects. Its static IPs are particularly useful for tasks requiring consistent access to specific websites.

5. Ensuring Data Privacy

With its no-logging policy, Proxy302 ensures that your scraping and crawling activities remain private. This is crucial for protecting sensitive data and complying with privacy regulations.

Conclusion

While web crawling and scraping serve different purposes, both rely on efficient and secure data extraction tools. Proxy302 enhances these processes by providing global IP access, avoiding bans, ensuring anonymity, and supporting high-volume operations. Whether you’re indexing the web or extracting specific data, Proxy302 is a reliable solution for your needs.

👉 Start Your Free Trial Now 👈 and unlock a world without digital borders.