Bots are everywhere on the internet. While some bots, like search engine crawlers, are beneficial, others can wreak havoc on websites by scraping data, launching attacks, or committing fraud. So, how do websites block these malicious bots? In this guide, we’ll explore the techniques and tools websites use to detect and block bots, ensuring a safer and smoother online experience.

What Are Bots?

Bots are automated software programs that perform tasks on the internet. They can be classified into two categories:

- Good Bots: These include search engine crawlers (like Googlebot) and chatbots that assist users.

- Bad Bots: These are malicious bots that scrape data, launch DDoS attacks, or commit fraud.

According to a recent report, bad bots accounted for 24% of internet traffic in 2024, making them a significant threat to websites.

Why Block Bots?

Blocking bots is essential for several reasons:

- Protect Sensitive Data: Prevent bots from scraping confidential information.

- Maintain Website Performance: Reduce server load caused by bot traffic.

- Prevent Fraud: Stop bots from creating fake accounts or making fraudulent transactions.

- Enhance User Experience: Ensure genuine users have a seamless experience.

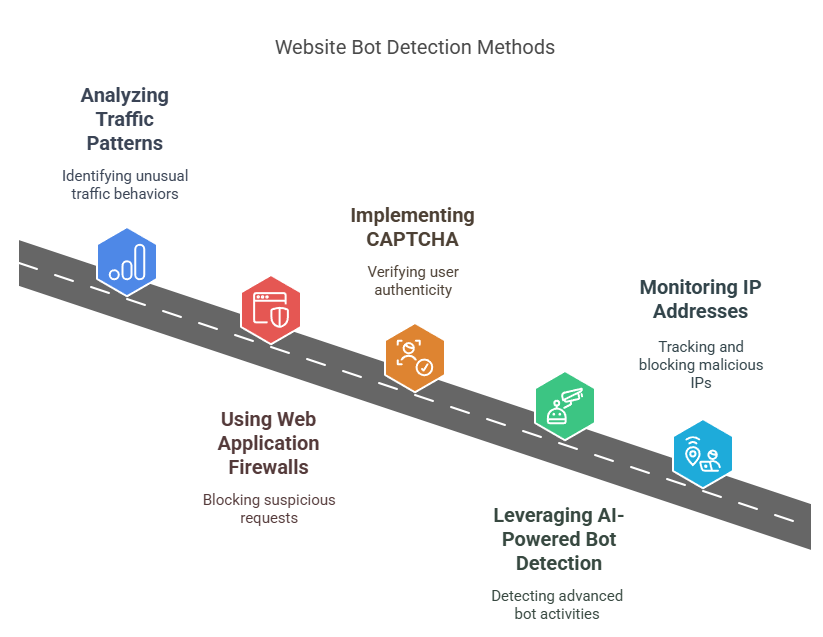

How Websites Detect Bots

1. Analyzing Traffic Patterns

Bots often exhibit unusual traffic patterns, such as:

- High Request Rates: Bots can send thousands of requests per second.

- Repetitive Behavior: Bots may perform the same action repeatedly.

- Non-Human Timing: Bots may operate around the clock or act faster and more regularly than a person could.

By monitoring these patterns, websites can identify and block bot traffic.
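To make the idea concrete, here is a minimal Python sketch that flags a client when its requests arrive too fast or too evenly spaced. The function name `looks_automated` and the thresholds `max_rate` and `min_jitter` are assumptions chosen for illustration, not values from any particular product.

```python
from statistics import mean, pstdev


def looks_automated(timestamps, max_rate=20.0, min_jitter=0.05):
    """Flag request timestamps (in seconds) that look machine-generated.

    max_rate (requests per second) and min_jitter are illustrative
    thresholds only; real systems tune them against their own traffic.
    """
    if len(timestamps) < 3:
        return False  # too little data to judge
    ordered = sorted(timestamps)
    duration = ordered[-1] - ordered[0]
    if duration <= 0:
        return True  # several requests at the very same instant
    rate = (len(ordered) - 1) / duration
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    # Coefficient of variation of the gaps: close to zero means
    # metronome-like spacing, which people rarely produce.
    jitter = pstdev(gaps) / mean(gaps)
    return rate > max_rate or jitter < min_jitter
```

A check like this is best treated as one signal among several, since a fast but legitimate API client can trip it too.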

2. Using Web Application Firewalls (WAFs)

Web Application Firewalls (WAFs) are a frontline defense against bots. They filter incoming traffic and block known malicious bots based on predefined rules. For example, WAFs can block IP addresses associated with botnets or data centers.
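The rule-based filtering described above can be sketched in a few lines of Python. This is not how any specific WAF is implemented; the `Request` type, the rule names, and the rule data are all hypothetical, and `203.0.113.0/24` is a reserved documentation range standing in for a data center network.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    ip: str
    user_agent: str


# Hypothetical rule data. 203.0.113.0/24 is a reserved documentation
# range used here as a stand-in for a data center network.
DATA_CENTER_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
BLOCKED_AGENT_FRAGMENTS = ("python-requests", "scrapy", "curl/")


def from_data_center(request):
    addr = ipaddress.ip_address(request.ip)
    return any(addr in net for net in DATA_CENTER_NETWORKS)


def has_blocked_agent(request):
    agent = request.user_agent.lower()
    return any(fragment in agent for fragment in BLOCKED_AGENT_FRAGMENTS)


def has_empty_agent(request):
    return not request.user_agent.strip()


# Rules are checked in order; the first match decides.
RULES = [
    ("data-center-ip", from_data_center),
    ("blocked-user-agent", has_blocked_agent),
    ("empty-user-agent", has_empty_agent),
]


def evaluate(request):
    """Return the name of the first matching rule, or None to allow."""
    for name, predicate in RULES:
        if predicate(request):
            return name
    return None
```

Returning the rule name rather than a bare yes/no makes it easier to log why a request was blocked and to spot rules that catch genuine users.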

3. Implementing CAPTCHA

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is a widely used method to block bots. By requiring users to solve puzzles or identify objects, CAPTCHA makes it much harder for automated clients to get through, although solving services and advanced bots can still defeat some challenges.

4. Leveraging AI-Powered Bot Detection

AI-powered tools, like Imperva Advanced Bot Protection, use machine learning algorithms to identify and block bots. These tools analyze behavior patterns, IP addresses, and other data points to detect even sophisticated bots.

5. Monitoring IP Addresses

Websites can block bots by maintaining a database of bot-associated IPs, proxies, and data centers. For example, Proxy302 offers a vast pool of 65M+ global IPs, enabling websites to identify and block suspicious traffic effectively.

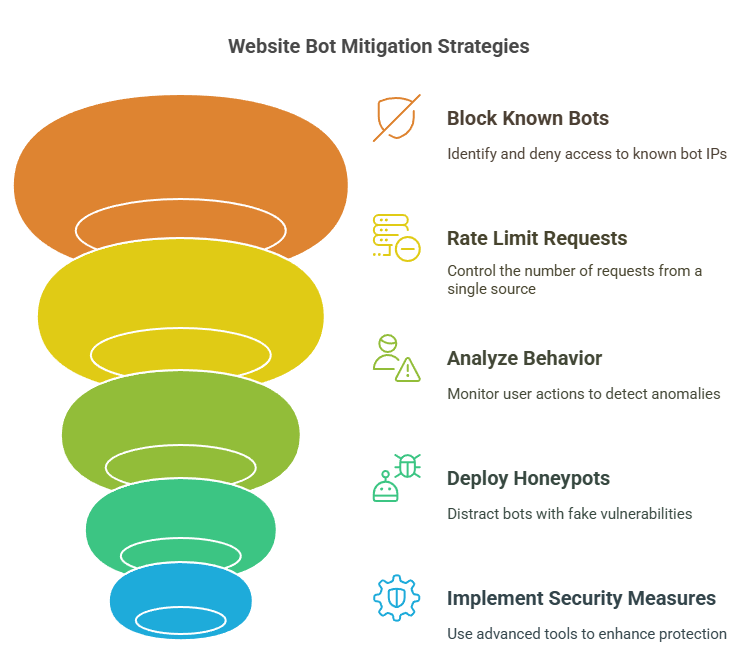

How Websites Block Bots

1. Blocking Known Bot IPs

Websites can use blacklists to block IP addresses associated with malicious bots. Tools like DataDome provide updated databases of bot-associated IPs, ensuring robust protection.
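As a rough sketch of how such a list might be loaded and checked, the Python below parses a plain-text feed with one address or CIDR range per line. The feed format and the function names `parse_blocklist` and `is_blocked` are assumptions for illustration; commercial providers ship their data in their own formats. The addresses used are reserved documentation ranges.

```python
import ipaddress


def parse_blocklist(feed_text):
    """Parse one IP address or CIDR range per line; '#' starts a comment."""
    networks = []
    for raw in feed_text.splitlines():
        entry = raw.split("#", 1)[0].strip()
        if not entry:
            continue
        try:
            networks.append(ipaddress.ip_network(entry, strict=False))
        except ValueError:
            continue  # skip malformed entries instead of failing the refresh
    # collapse_addresses merges adjacent and overlapping ranges, but it
    # only accepts one IP version per call.
    v4 = [net for net in networks if net.version == 4]
    v6 = [net for net in networks if net.version == 6]
    return (list(ipaddress.collapse_addresses(v4))
            + list(ipaddress.collapse_addresses(v6)))


def is_blocked(ip, networks):
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in networks)


FEED = """
# example feed (documentation ranges only)
203.0.113.0/25
203.0.113.128/25
198.51.100.7
not-an-ip
2001:db8::/32
"""

blocklist = parse_blocklist(FEED)
print(is_blocked("203.0.113.200", blocklist))
print(is_blocked("192.0.2.1", blocklist))
```

A linear scan is fine for a short list. For a feed with many thousands of ranges, a prefix tree or sorted-interval lookup would be a more reasonable choice.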

2. Rate Limiting

Rate limiting restricts the number of requests a user (or bot) can make within a specific time frame. For example, a website might allow only 10 requests per second from a single IP address.
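The 10-requests-per-second example can be sketched as a sliding-window limiter in Python. The class name `SlidingWindowLimiter` and its parameters are illustrative assumptions, and the sketch keeps its state in memory, so it only suits a single process.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests=10, window_seconds=1.0,
                 clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)  # client IP -> recent timestamps

    def allow(self, client_ip):
        now = self.clock()
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit; rejected requests are not recorded
        hits.append(now)
        return True
```

A web handler would call `allow(ip)` for each request and respond with HTTP 429 (Too Many Requests) when it returns `False`. Sites running several servers usually keep these counters in a shared store instead of process memory.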

3. Behavioral Analysis

By analyzing user behavior, websites can distinguish between humans and bots. For instance, bots often lack mouse movements or exhibit unnatural scrolling patterns.
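Here is a small Python sketch of the two signals mentioned above. It assumes the page already reports a mouse-move count and scroll event timestamps to the server; the `Session` type, the function `bot_signals`, and the thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from statistics import pstdev


@dataclass
class Session:
    mouse_moves: int = 0
    # Timestamps (in seconds) of scroll events reported by the page.
    scroll_times: list = field(default_factory=list)


def bot_signals(session, min_scroll_events=5, min_gap_spread=0.005):
    """Return the list of bot-like signals found in a session.

    Both thresholds are illustrative assumptions, not tuned values.
    """
    signals = []
    if session.mouse_moves == 0:
        signals.append("no mouse movement")
    times = sorted(session.scroll_times)
    if len(times) >= min_scroll_events:
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        # People scroll in uneven bursts; near-identical gaps suggest a script.
        if pstdev(gaps) < min_gap_spread:
            signals.append("uniform scroll timing")
    return signals
```

These are hints rather than proof. Visitors on touch screens or using a keyboard produce no mouse movement either, so signals like these are usually combined with other checks before anyone is blocked.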

4. Using Honeypots

Honeypots are hidden elements on a website that are invisible to humans but detectable by bots. When a bot interacts with a honeypot, the website can block it immediately.
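A common form of honeypot is a hidden form field. The Python sketch below shows the idea, assuming a signup form whose submitted fields arrive as a dictionary; the field name `website` and the function `is_honeypot_triggered` are hypothetical choices for illustration.

```python
# Hypothetical field name. It should look tempting to a form-filling bot
# but must never be shown to people.
HONEYPOT_FIELD = "website"

# The field is hidden with CSS and removed from the tab order, so a person
# using a browser or a screen reader should never fill it in.
FORM_HTML = """
<form method="post" action="/signup">
  <input name="email" type="email">
  <div style="display:none" aria-hidden="true">
    <input name="website" type="text" tabindex="-1" autocomplete="off">
  </div>
  <button type="submit">Sign up</button>
</form>
"""


def is_honeypot_triggered(form_data):
    """Return True when the hidden field came back with a value."""
    return bool(form_data.get(HONEYPOT_FIELD, "").strip())
```

Bots that fill every input they find will populate the hidden field, while a genuine submission leaves it empty. Bots that render the page and skip invisible fields will slip past, so this works best alongside the other techniques.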

5. Implementing Advanced Security Measures

Tools like Cloudflare and Akamai offer advanced bot protection by combining multiple techniques, including AI, machine learning, and real-time monitoring.

Practical Applications of Bot Blocking

Case Study 1: E-Commerce Fraud Prevention

An online retailer used AI-powered bot detection to block bots attempting to create fake accounts and make fraudulent purchases. This reduced fraud by 40% and improved customer trust.

Case Study 2: Protecting Content from Scraping

A news website implemented rate limiting and CAPTCHA to prevent bots from scraping articles. This ensured that only genuine users could access their content.

Case Study 3: Reducing Server Load

A gaming platform used Proxy302’s IP monitoring to block bots overwhelming their servers. This improved website performance and user experience.

Tips for Effective Bot Blocking

- Use Multiple Techniques: Combine CAPTCHA, WAFs, and AI-powered tools for comprehensive protection.

- Monitor Traffic Regularly: Keep an eye on traffic patterns to detect new bot threats.

- Update Security Measures: Stay ahead of bots by regularly updating your detection and blocking tools.
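One simple way to combine techniques is to add up points for each signal and pick a response based on the total. The sketch below is only an illustration: the signal names, weights, and thresholds are made up, and commercial tools use far richer models.

```python
# Hypothetical weights and thresholds, for illustration only.
WEIGHTS = {
    "ip_blocklisted": 60,
    "honeypot_triggered": 70,
    "rate_limit_exceeded": 30,
    "no_mouse_movement": 20,
}


def decide(signals, challenge_at=30, block_at=60):
    """Map a collection of signal names to 'allow', 'challenge', or 'block'."""
    score = sum(WEIGHTS.get(name, 0) for name in signals)
    if score >= block_at:
        return "block"
    if score >= challenge_at:
        return "challenge"  # for example, show a CAPTCHA
    return "allow"
```

The middle tier matters: sending borderline traffic to a CAPTCHA instead of blocking it outright gives genuine users a way through when a single weak signal misfires.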

Conclusion

Blocking bots is crucial for protecting your website, maintaining performance, and ensuring a positive user experience. By leveraging advanced tools and techniques, you can stay one step ahead of malicious bots.

Ready to take your bot-blocking to the next level? Proxy302 offers a free trial with access to 65M+ global IPs, city-level targeting, and a flexible pay-as-you-go model. Sign up now and experience the difference!
